My musings, findings, experiments and help related to PHP, general web development and pretty much anything else

Monday, November 23, 2009

Chrome OS: The big misunderstanding

Monday, October 26, 2009

Jaunty was Canonical's Vista

The major issue with Jaunty was a newer version of the X server that abandoned the proven xorg.conf files in favour of auto-generated X configurations. Great idea, but it didn't work so well, especially for folks forced to use older graphics drivers or anyone using ATI graphics cards with ATI's proprietary drivers. For myself, with an ATI card installed at home, it meant I had to do some rather tedious workarounds to get my graphics card working on Jaunty.

Another issue was the switch to PulseAudio as the default sound sub-system in Jaunty. Again, PulseAudio is great technology but people just weren't fully prepared for it. To this day I still have sound issues with my USB sound card "dongle" for my headphones, as well as issues with sound from Flash video in Firefox.

With Karmic, it looks as if the Ubuntu developers have not messed around with systems so dependent on outside support, such as the graphics and sound systems. Massive improvements to the startup systems, though, have meant I have boot times of around 5 to 10 seconds (yes, you read that right).

Jaunty was, however, never an out and out disaster like Vista was for Microsoft. It had its issues, but with a 6 month release cycle, as opposed to Microsoft's years of development, things can get back on track very quickly, and Karmic sure seems to show that they are.

That is one of the big advantages of agile, open source development that Canonical has over Microsoft.

Wednesday, October 14, 2009

PHP Session write locking and how to deal with it in symfony

So as my comeback article I thought I would write on an issue that was plaguing us in development of Pinpoint and how, thanks to help from the great symfony community at the symfony users mailing list, we got it resolved.

The problem

With Pinpoint 2, a symfony-based application we have developed here at Synaq, we employ quite a lot of Ajax requests in order to make the interface more responsive and less bandwidth hungry; why reload an entire page when you really only want a small sub-set of that page to change? A problem came about when we had an Ajax request running: while this request was waiting for a response from the server, if a user clicked another link, that link would not "process" until the previous Ajax request had completed. To us it looked as if our requests were "queuing" instead of working asynchronously as they should.

On one particular section of Pinpoint, we have a number of Ajax requests loading at once, each one interrogating the database for data. Each of those requests "queued" behind the others, and any attempt by the user to go to another module resulted in waiting for each of these queued requests to complete before the browser would process the user's interaction.

The cause

After going through all sorts of different possible fixes, none of which worked, I eventually submitted the above problem to the symfony users mailing list. The response that came back was that it probably had something to do with PHP session-write locking.

As anyone who codes in PHP knows, PHP manages sessions for you. To ensure that session data does not become corrupted between requests, PHP locks the session files for a user while it is processing a request from that user. This results in the following process if you have multiple requests coming through:

1. Request comes into server, and PHP locks session files.

2. Another request comes in but cannot access the session files because they are locked.

3. The first request processes, running all SQL, processing results, etc.

4. Yet another request comes in but cannot process because session files are locked.

5. The first request finally finishes, sends its output back to the caller and unlocks session files.

6. The second request begins processing, locks session files and continues to do what it needs to.

7. Request three is still waiting for session access.

8. Yet another request comes in but ..... I think you get the picture.
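To make the queue above a bit more concrete, here is a minimal plain PHP sketch (not symfony code) of the general idea: take what you need from the session, save what must be saved, and release the lock before the slow work starts. The slowReport() function and the session keys are made up for illustration, and it assumes the default file-based session handler.

```php
<?php
// Hypothetical stand-in for slow work such as running SQL and processing results.
function slowReport()
{
    usleep(200000); // pretend the query takes 0.2 seconds
    return 'report ready';
}

session_start();                                  // PHP locks this user's session file
$userId = isset($_SESSION['user_id']) ? $_SESSION['user_id'] : 0; // read what we need
$_SESSION['last_seen'] = time();                  // write what must be saved
session_write_close();                            // save the data and release the lock

// From here on, other requests from the same user no longer have to wait.
echo slowReport(), ' for user ', $userId, "\n";
```

Without the session_write_close() call, the lock would be held until the script ended, which is exactly the queuing described in the steps above.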

The solution

The only way to resolve this issue is to force the requests to unlock the session files as soon as possible. Thankfully symfony has its own user session storage classes that make this incredibly easy.

The one problem is that you cannot release the session lock until after you have saved data into session that needs to be saved. Our solution was that for each action that processes an Ajax request, write everything as soon as possible to session that needs it and then unlock session to allow any other request to begin processing.

We hit a roadbump. Using symfony's $this->getUser()->setAttribute() command to store session data, we then used PHP's session_write_close() to force PHP to let go of the lock and let the next request begin work. This did unlock session but we noticed that all the data allocated to session using $this->getUser()->setAttribute() was not saved.

After a little exploration of the symfony classes we noticed that when the setAttribute() method is used, in order to speed up processing, symfony does not immediately write to the global PHP $_SESSION variable. Instead it keeps those values in an array until the end of script execution and only then writes to session. By calling PHP's session_write_close() ourselves we pretty much made it impossible for symfony to do this, because to keep session data consistent, PHP does not allow a script to write to session once the session has been unlocked.

We did, however, find another method: $this->getUser()->shutdown(). This forces symfony, when the shutdown() method is called, to write session data into $_SESSION and then it also runs session_write_close() itself.
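The buffering behaviour is easier to see in a small stand-alone sketch. The BufferedUser class below is a hypothetical stand-in written for this post, not symfony's real user class, and a plain array plays the role of $_SESSION. It only illustrates why values set with setAttribute() are not in storage until shutdown() has run.

```php
<?php
// Hypothetical stand-in that buffers attributes the way the post describes.
// This is NOT symfony's actual class.
class BufferedUser
{
    private $attributes = array();
    private $storage;

    public function __construct(array &$storage)
    {
        $this->storage = &$storage;        // plays the role of $_SESSION
    }

    public function setAttribute($name, $value)
    {
        $this->attributes[$name] = $value; // held in memory only
    }

    public function shutdown()
    {
        // Flush the buffered values into storage. With a real session this is
        // also the point where the session would be written and closed.
        foreach ($this->attributes as $name => $value) {
            $this->storage[$name] = $value;
        }
    }
}

$fakeSession = array();
$user = new BufferedUser($fakeSession);
$user->setAttribute('filter', 'spam');

$beforeShutdown = $fakeSession;  // still empty, nothing has been flushed yet
$user->shutdown();
$afterShutdown = $fakeSession;   // now holds the buffered attribute

var_dump($beforeShutdown === array());
var_dump($afterShutdown['filter'] === 'spam');
```

If the session had been closed between setAttribute() and shutdown(), the flush would have had nowhere to go, which matches what we saw with our lost data.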

The end result

We now have actions that process Ajax requests and once all data has been sent to session using $this->getUser()->setAttribute() we run the $this->getUser()->shutdown() method. The difference was incredible and has actually sped up our entire application a ton.

One thing to be careful of, however: you do need to be sure that you call that shutdown() command at the right time, because if you call it too early, anything you set afterwards will not get saved and PHP will just ignore it. We had to reshuffle some code so that all the database calls and data processing functions were run after shutdown() as well.

Thanks again to the symfony community for helping to point this out and hope this helps others who may have the same issue as well.

Wednesday, July 22, 2009

Warcraft Movie is going to ROCK!

How do I know it's going to be amazing?

1. The franchise lore

The storyline that accompanies Warcraft and the events that play out in its plot all the way from the 1994 RTS game to the current WoW are a movie maker's delight. Full of action, intrigue, drama, wars, treachery and love stories.

2. Sam Raimi

When Blizzard first publicly expressed an interest back in 2007 about making a Warcraft movie, Uwe Boll approached Blizzard saying he could direct for them. With this director's serious lack of success at game-to-movie conversions (anyone remember BloodRayne?), Blizzard pretty much laughed him out and told him where he could stick his offer. They then went and selected, in my opinion, one of the industry's finest action/fantasy directors. Pretty much anything Sam has directed has ended up golden.

3. Blizzard's neurotic caution with its IP

Blizzard has a very big reputation for ensuring that the quality of anything related to its IP is stellar. For evidence, look at Starcraft Ghost, a game that was supposed to be an FPS based version of the Starcraft universe that was never launched because Blizzard thought it wasn't good enough. The expansions for World of Warcraft were also supposed to only take a year each and notoriously took a lot longer because Blizzard wanted to ensure a quality product. Starcraft 2 as well was supposed to be released last year but was delayed because again, Blizzard wanted it perfect.

I have no doubt they will employ the same tactic with the movie and not allow it to be released if it ends up being a load of crap.

There have also been numerous comments from people who are not as in love with the Warcraft universe as I am, or as the 11 million other players of WoW are, and who believe that only die-hard fans will watch it. Personally I don't think this is the case. The same was said about Harry Potter and yet more people have watched the movies than have read the books or even knew about the books. The lore and storyline that exists within the Warcraft universe is so broad and appealing that it should be a great movie to watch regardless of whether you have ever seen or taken part in the games.

So come release day I will be at the front of the ticket buying queue and happily settle down in front of the big screen with popcorn and drink to be absorbed in a universe I have come to love.

Lok'tar Ogar!!!

Tuesday, July 21, 2009

RIAA needs to follow the example of other industries

One of my fondest memories here in South Africa was writing an article to a local IT publication, discussing how the latest movie titles can be bought at almost any street corner for about half what they cost in the stores, and that the best way that the movie industry could combat these pirates was to drop pricing and so make it unprofitable and too risky for these operators to continue. A few months later, genuine DVD titles in shops dropped from R300 each to a paltry R150 or thereabouts. Now, even if you went looking, the street-side vendors selling their pirated versions have all but vanished.

Combine this kind of pro-active approach to keeping your physical stock moving with online content-delivery platforms, and the amount of news I read about the movie industry losing out to pirates is so minimal it's more like background noise.

Moving on to book publishers, ebooks could have been a real threat and could have resulted in publishers employing tactics similar to the RIAA. Instead, you can now buy ebooks online for a fraction of the price of physical books themselves. And developments like the iPhone and iPod Touch (amongst others) make consuming an ebook that much more pleasant. While devices like the Kindle by Amazon and now Barnes & Noble's own ebook reader also point to the book publishing world's flowering digital transformation, I still think these large, expensive devices are ridiculous, but that is just a personal opinion.

The RIAA on the other hand seems convinced that the only way to continue in a world where people want their content digitally is to deny them that and enforce draconian and, to be honest, ridiculous measures to preserve a business model that is so behind the times it's laughable. People are already buying music online on a per track basis. The RIAA, and the companies it apparently attempts to protect, need to realise that the days of the "album" are nearly at an end. People want songs not epics. People do not want to be forced to buy a physical storage medium like a CD that contains 12 songs when they are only interested in 1.

Lastly, if the RIAA is not careful, they may find themselves defunct, along with the record companies they represent. With the explosion in online presence by artists and the ease with which anyone can publish content online, even music, recording studios that sign up artists as has traditionally been done may no longer be necessary. Artists generate the majority of their own income by touring and royalties, not record sales. Record sales only help market the artist to a prospective audience and line the recording studios' coffers. But when an artist has access to an all pervasive medium like the Internet as well as the multitude of portals to market their own content (YouTube, etc), they may realise they don't actually need a recording studio anymore. Artists may dictate to marketing agencies how they want their content promoted instead of the other way round.

Monday, July 20, 2009

PHP Security Tips

The following tips I have arranged in order of what I feel can be the most dangerous. If anyone has any comments please feel free to post them up and share with everyone else.

1. Command line commands

PHP has a great amount of power, and that power extends to the server's command line itself, allowing you to execute shell commands on the server directly from within the script you are coding. I have seen some coders liberally pepper their code with the shell_exec() function or equivalent PHP functions without a seeming care for what they may be opening up.

My first tip here ... don't use these functions! That's right, if you have no critical need to actually run a command on the server's shell then just don't do it. Rather take the time to figure out another way than potentially open up your server to an attack through this vector.

Granted, there are numerous ways the server itself can be set up to limit PHP's rights as a user to access certain server functions, and these restrictions should be in place anyway. These are topics for a system admin and so are out of scope for this article, but why run the risk anyway?

Secondly, if all other options are exhausted and you absolutely have to use the shell, then please remember to clean all data being passed to it. As an easy first step you can use PHP's own escapeshellarg() function, which wraps a value in quotes and escapes any quotes inside it so that the shell treats it as a single argument, but this is also not enough on its own.

Another tactic is to avoid using user submitted data as part of the shell argument. For example you can let a user choose from a few options and then run a corresponding pre-written argument in shell, rather than add a user's submitted data to the argument.
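As a quick illustration of escapeshellarg(), here is a small sketch. The input string and the ls command are made up for the example, and the command is only printed, not executed.

```php
<?php
// Hypothetical user input that tries to chain a second command.
$userInput = "report.txt; rm -rf /";

// escapeshellarg() quotes the value so the shell sees one literal argument
// instead of a file name followed by a second command.
$safeArg = escapeshellarg($userInput);

$command = 'ls -l ' . $safeArg;
echo $command, "\n";
```

On a Unix-like system the whole input ends up inside one pair of single quotes, so the "; rm -rf /" part is just treated as part of an odd file name.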
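A rough sketch of that pre-written options idea might look like this. The option names and commands are purely hypothetical; the point is only that the user's value is used as a lookup key and never becomes part of the command itself.

```php
<?php
// Hypothetical map from user-facing choices to pre-written commands.
function commandForChoice($choice)
{
    $allowed = array(
        'disk'   => 'df -h',
        'uptime' => 'uptime',
    );
    // Only exact, known keys are accepted; anything else yields null.
    return isset($allowed[$choice]) ? $allowed[$choice] : null;
}

$choice = 'disk; rm -rf /';          // hostile input
$command = commandForChoice($choice);

if ($command === null) {
    echo "Unknown option\n";
} else {
    echo shell_exec($command);
}
```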

2. Variable variables

Like shell commands above, just don't use them! They are a very big potential risk security-wise and there is almost always an alternate method to using a variable variable. For those who are not sure what I mean, PHP has the ability to create variables based on the value of another variable, for example:

$foo = 'bar';

$$foo = "Hello";

echo $foo."\n";

echo $bar."\n";

echo $$foo."\n";

What would be echoed is:

bar

Hello

Hello

Now imagine a hacker figures this out and is able to submit user data to overwrite one of your own variables with his value and so compromise your system. The consequences, depending on where it's been used, can be very dangerous.

Other ways to make using this more secure? There aren't any. Just don't use them!
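For the "alternate method" I mentioned, the usual replacement is a plain array. Here is a minimal sketch, with made-up input keys, showing user values kept in their own array so they can never overwrite one of your own variables.

```php
<?php
// Hypothetical request data where an attacker has added an extra key.
$input = array('colour' => 'blue', 'isAdmin' => '1');

$isAdmin = false;

// Risky pattern, shown only as a comment:
// foreach ($input as $key => $value) { $$key = $value; }  // would overwrite $isAdmin

// Safer: keep user values in their own array and copy only the expected keys.
$allowedKeys = array('colour');
$settings = array();
foreach ($allowedKeys as $key) {
    if (isset($input[$key])) {
        $settings[$key] = $input[$key];
    }
}

var_dump($isAdmin);
print_r($settings);
```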

3. Clean, clean, clean those submitted values

We all know the $_SERVER, $_COOKIE, $_GET and $_POST superglobals. Those oh so convenient variables that store all that lovely URL and form data that a user submits. But it is shocking how many developers go straight to using that user input as is! Values submitted in URLs and forms by hackers are the number ONE vector of attack on web applications and should therefore get the most attention when you are developing applications. Great PHP frameworks like symfony will escape arguments from forms and URLs as a matter of course, but this doesn't mean you are completely safe.

SQL injection is the biggest attack through form submission and is really quite preventable. Functions such as mysqli_real_escape_string() are great for ensuring that posted data is escaped properly before it hits the database query level.
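Escaping needs a live database connection to demonstrate, so the sketch below swaps in a different technique with the same goal: prepared statements, where the submitted value is bound as data and never becomes part of the SQL text. It assumes the pdo_sqlite extension is available and uses a made-up in-memory table.

```php
<?php
// Assumes pdo_sqlite is installed; the table and rows are invented for the demo.
$db = new PDO('sqlite::memory:');
$db->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$db->exec('CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)');
$db->exec("INSERT INTO users (name) VALUES ('alice')");
$db->exec("INSERT INTO users (name) VALUES ('bob')");

function findUsersByName(PDO $db, $name)
{
    // The :name placeholder is filled in by the driver, not by string concatenation.
    $stmt = $db->prepare('SELECT name FROM users WHERE name = :name');
    $stmt->execute(array(':name' => $name));
    return $stmt->fetchAll(PDO::FETCH_COLUMN);
}

$hostile = "alice' OR '1'='1";
print_r(findUsersByName($db, 'alice'));   // matches the one real row
print_r(findUsersByName($db, $hostile));  // matches nothing, the quote is just data
```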

Another thing to check, and this applies to framework users as well, is that you properly validate your data. Frameworks can do some basic checking on data but are not complete as they tend to be very generic, validating for the sake of "is it a string", "is it x characters long", "is it an email address" etc. Fields like First Name in a form for example can be validated further. Does the name have any spaces? If so, it is incorrect. Does it have any numbers? If so it is invalid. Again, these just help prevent any possible vectors of attack.
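The First Name rule described here could be sketched roughly like this. The function name and the 50 character limit are my own assumptions for the example; the letters-only rule (no spaces, no digits) is the one from the paragraph above.

```php
<?php
// Sketch of the stricter first-name check: letters only, so no spaces and
// no digits. The 50 character limit is an assumed value.
function isValidFirstName($name)
{
    return preg_match('/\A\p{L}{1,50}\z/u', $name) === 1;
}

var_dump(isValidFirstName('Gareth'));        // accepted
var_dump(isValidFirstName('Gareth Smith'));  // rejected, contains a space
var_dump(isValidFirstName('G4reth'));        // rejected, contains a digit
```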

These are just a few of the very large security risks to keep a look-out for while developing. While this isn't a complete guide by any stretch of the imagination on PHP application security, hopefully people may find the information here useful.

If you have any comments or extra tips to share, feel free to add your comment.

Tuesday, July 14, 2009

Mouse gestures with KDE 4.3

Sure, mouse gestures themselves are nothing new and have been used in the likes of the Opera browser for years now, but to have an operating system supporting this feature is a great step as it just increases the usefulness of the feature tenfold. This post is just a quick demo to show how to set up and use mouse gestures. It is remarkably easy.

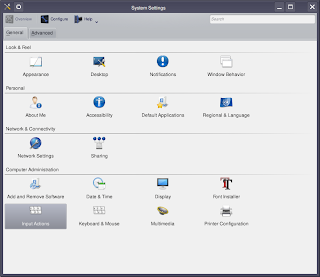

First of all, you need to get to the System Settings panel. Press Alt+F2 and type "System Settings" or go to the Application Launcher >> Computer >> System Settings until you see this window:

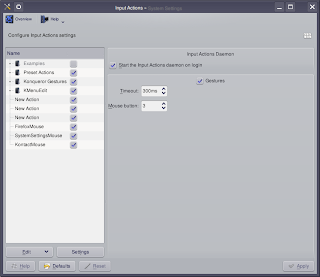

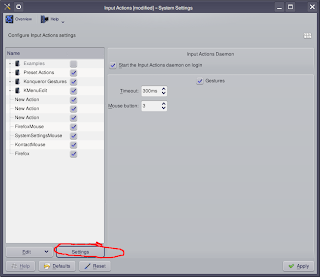

Now open the Input Actions applet and you should see:

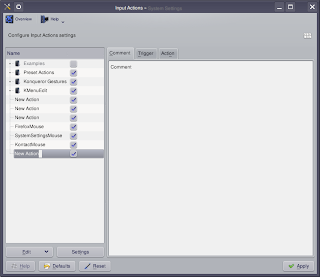

Along the left side there is a tree menu. Just right-click on the blank space there and navigate to New >> Mouse Gesture Action and click on Command/URL. You will then see:

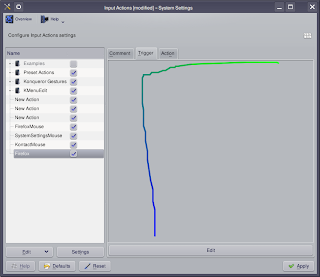

Enter the name Firefox. On the right side, click the tab near the top labelled Trigger so that we can set what mouse action will open Firefox. Click the Edit button at the bottom and a new window pops open. Hold the left mouse button down and draw whatever mouse movement you want to use to open Firefox. In my case I did an upside-down L or rather an F without the little line. Generally you want to keep the gestures as uncomplicated as possible. You should now see:

Click the Action tab at the top and in the Command field type in "firefox" (minus the quotes of course). Hit the Apply button and it's set up.

By default, mouse gestures work while holding the right mouse button down. So hold your right mouse button and draw the gesture you decided on anywhere on the screen. In my case I will draw my upside-down L. Firefox should now open. You can of course change which mouse button needs to be used; especially useful if you have a multi-button mouse.

Under the tree-menu click the Settings button. You will see it says that mouse button 3 is selected. 1 is the left button, 2 is the wheel and 3 is the right mouse button. Change the number, hit Apply, test your gesture with the mouse button you want to use and if it doesn't work change it again. Eventually you will get the correct number that corresponds to the mouse button you want to use.

There are other options as well for mouse gestures. For example, by exploring the DBus option, I was able to figure out how to use a mouse gesture to allow me to switch desktops easily. This is a very advanced feature however and I'll put together a little demo on how to use DBus and mouse gestures in another post if I have time. For now, enjoy the ease-of-use that this advanced new feature of KDE 4.3 gives you and have fun. :)

Thursday, June 11, 2009

Why Linux will take over from Windows

To sum it up briefly, Linux's open source, distributed development by a group of passionate developers has a far greater longevity than Microsoft's hierarchical, closed-source development driven by money. But why does this make such a big difference? First let's look at the two groups' drivers and what makes them do what they do.

Microsoft develops Windows as a closed-source, proprietary and, most importantly, commercial product. Microsoft is a corporation and therefore needs to answer to share-holders, governments, anti-trust law makers, and other businesses. The biggest reason Microsoft develops Windows is to generate revenue. They employ developers to work on the project, so monetary cost affects the skills and numbers of developers they can acquire. Those developers are instructed as to what they should develop, with Microsoft management guiding the direction and mission (in a holistic sense) of the design and development. They are under pressure to develop products with strict timelines in place and investors to satisfy.

Linux is developed by an organisation of non-profit developers. The developers who work on the project volunteer (for the most part) and so are driven not by the pay-check but by a passion for what they do. Generally speaking, people can select what they want to work on and what best fits their own skill sets. In addition, being open-source, anyone can get involved, and in fact they have their entire user-base available as potential contributors. Naturally not all can be developers but contributions can be made in many ways. Linux does not need to answer to shareholders to justify its releases. Goals for a specific distribution's release are decided upon communally so features get completed that the users actually want. Linux does not want to make money from sales of the operating system so there are no problems with anti-trust. Commercial backing, while helpful, is not necessary so Linux cannot go bankrupt or suffer cashflow problems (very applicable in the current uncertain economy).

It's pretty much because of these differences that Linux distributions have an edge over their commercial counterparts. Microsoft, because of its reliance on being commercial, could go bang at any time. We don't know what goes on behind the closed doors of the Redmond giant. Who knows if Microsoft will be the next Enron? Perhaps the money troubles at Microsoft are larger than they let on? Or it is possible that some new form of anti-monopoly legislation shoots them in the foot and makes it difficult for them to continue developing Windows the way they are. Who knows? We can't tell because everything at Microsoft is closed off to us and they rely on dollars, not contributors, to keep ticking. If Microsoft suddenly shut its doors, all those Windows users would be left high and dry because Windows is closed source. No one can pick it up and carry on.

Even if the financial world collapses totally, Linux wouldn't die. Being passionate contributors, its developers do not rely on a paycheck to keep working. Even if a single Linux distribution nabbed 90% market share, anti-trust is not an issue because it is open-source and freely available; there isn't any direct income generated from sales of the distribution. And because of its open source nature, even if every single core developer for a Linux distribution left the project, the community behind it can still keep developing it. Anyone can pick up the source code and continue development. Users wouldn't be shafted!

A timeline? I have no idea. Like I said, this is a long term prediction. It may take 2 years, may take 10. But eventually the time will come where Microsoft can no longer compete development-wise and the crown will start slipping, perhaps dramatically, perhaps over a slow, accretionary process of user disgust. Only time will tell.

Friday, June 5, 2009

Microsoft's new babies don't play well together

I have Windows 7 installed on my home machine and have been giving the RC a spin over the last few months (before that the Beta as well). And when I attempted to use Bing on Firefox in Windows 7, I noticed that most of its flash (literally) and pizzazz were missing. I didn't get the little mouse-over effects on the Bing homepage nor did I get a link to search for Video in the options. Thinking that this was probably a quirk of Firefox on the Windows 7 RC, I opened Internet Explorer 8. Lo and behold, I had the same problem.

My next port of call was the Adobe Flash plugin, as Bing seems to use that for its homepage effects as well as for the video search pages. I checked and the plugin was actually installed both on Firefox and IE 8. To make sure that I hadn't missed something obvious I uninstalled the plugins for both browsers and reinstalled. Still no joy. It seems that Bing is unable to detect that IE 8 or Firefox on Windows 7 actually have Flash installed. Either that, or it checks for a supported operating system and Bing just hasn't got Windows 7 on the list yet.

Microsoft's newest baby, Bing, seems to not play well with its other baby, Windows 7. So they cannot even make sure their own products work well together.

One consolation. I have been using Bing on Kubuntu 9.04 with Firefox 3.0 with absolutely no problem. At least they are supporting the rival OS......

Tuesday, June 2, 2009

Microsoft's Bing is cool but....

Bing is actually really good. It eschews Google's philosophy of simple and clean by making the search interface more attractive. It keeps a search history so you can always refer back to what you searched for before, which in itself attracts me as I constantly need to re-search for things. One of its greatest features is the mini-preview of a site you can get by hovering on a link to the right of the search result. This gives you a quick rundown of what that page is about, plus relevant links on that page that may be useful too, making it relatively easy to get to what you want on that site. See the pic showing the search results for Synaq and the preview of the first result.

So why the but in the title? Bing will probably get blocked at pretty much any organisation's firewall level, perhaps even at home. The simple reason for this is that Bing also allows you to preview video results by just hovering over a video thumbnail. The video itself actually plays in the search results window as you hover over it, which is a great way to preview video but can allow people to bypass firewall settings that are supposed to block things like porn.

Techcrunch have already written about this and it can mean bad things for Microsoft's goal to grab market share. Hard to do that if schools, corporations and any other organisation providing people Internet access over its network block access to Bing. The problem is that most organisations filter on a per site basis. Bing circumvents that with its video preview feature acting as a kind of proxy to these not-so-safe sites. The feature is great and makes finding that video you are after even easier, but human nature will abuse it and already has started doing so.

Sure, there are other ways to filter at a firewall, like checking whether the url contains certain search strings. But then someone has to maintain a growing list of potential search terms that people can use to try and get results from Bing to satisfy their craving for the hardcore. It's a lot easier to just add www.bing.com to a block list, and that will probably end up being the norm unless Microsoft can come up with a better way to do this.

Bing does have a safe search setting, like Google, but it's a matter of two clicks to disable it. One way Microsoft can help alleviate this issue is to include the safe search setting (full, moderate, off) in the url as well with each web request. A firewall can then filter based on that, allowing people onto Bing if their safe search setting is on full.
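To show what such a filter rule could look like, here is a small sketch. Everything about it is hypothetical: the "safesearch" parameter name and its values are made up to match the suggestion above and are not something Bing is known to send.

```php
<?php
// Hypothetical filter rule: allow a search URL only if it carries a
// "safesearch=full" parameter. The parameter name and values are invented.
function isRequestAllowed($url)
{
    $query = parse_url($url, PHP_URL_QUERY);
    if (!is_string($query)) {
        return false;              // no query string, so no setting to trust
    }
    parse_str($query, $params);
    return isset($params['safesearch']) && $params['safesearch'] === 'full';
}

var_dump(isRequestAllowed('http://www.bing.com/search?q=cats&safesearch=full'));
var_dump(isRequestAllowed('http://www.bing.com/search?q=cats&safesearch=off'));
```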

Who knows though what the big Redmond will end up doing. I'd actually hate to have that video preview ability removed because it is really useful, especially in a place like South Africa where bandwidth is still at a premium and being able to preview a video quickly for a few seconds before loading up the entire host site is advantageous to the bottom line.

Sunday, May 24, 2009

Symfony ... Good for ALL your projects

I can understand why Fabien would make a comment like that. To get going with symfony can be a little bit time consuming and to get to grips with its architecture and how to "code for symfony" can again take some time. But if you have already used symfony and learnt how to use it or plan to use it for all your projects, then those disadvantages fall away.

There is another reason why I feel symfony is great for even the small projects. How many "small" projects actually stay small? How many times have you started work on a project that is supposed to take only a few weeks at most to finish and it ends up still in active development months later? The problem with starting any project with the mindset that it's only a small one is that when it suddenly grows to be a rather large application, extensibility and maintenance start to become, well, a little nightmarish.

If you start a new project, even a so-called small one, with symfony, the abstraction required for good extensibility and maintainability is enforced on you. If this project suddenly grows it's not a problem because everything is already set up to allow it to be expanded.

An example is here at Synaq, one of our Senior Linux Technicians, a guy who usually works on setting up new servers, was asked to create a simple little interface for a Small Business Firewall product we are developing. This application was only really supposed to pull basic info into a simple interface for a customer to read. The problem is that now, more functionality than was originally planned needs to be integrated into this little app and it now needs a database backend to accomplish that. If the project had been started with symfony, it would have been a simple case of creating the database itself, sending a couple of symfony commands to generate an ORM model to interact with that database and 90% of the work would have been done.

After chatting with Jason, the System admin developing this application that used to be considered small, and explaining symfony, he is thinking of migrating the application to it. Ajax was another example. Some of the functionality that was added to the requirements of this little application was that data be updated on a few pages every few seconds. This meant that Jason now had to learn the Prototype library. With symfony he could have just used the built-in helper functions to accomplish the same thing.

The biggest problem with "small" projects is what people don't foresee. A lot of the time these projects end up growing in requirements and suddenly turn into large, unwieldy projects that are difficult to maintain and extend with new functionality. By using symfony you may have a little slow down (perhaps) in the beginning, but you will end up with a far more robust and useful framework which you can extend almost infinitely into the future.

Tuesday, May 12, 2009

Why Blizzard rocks!

The real reason I love them is that while most games development houses are so focussed on the latest and greatest graphical punishment for your PC's hardware, Blizzard will work out the lowest common-denominator when it comes to hardware specs and then focus on that level of graphical grunt. No need for the latest price busting graphics card to play World of Warcraft or Warcraft 3. And the good news is that their upcoming releases, Diablo 3 and Starcraft 2 will follow the same trend.

Instead of graphics, Blizzard focus on what is, to me at least, a more important aspect; gameplay. A game can be absolutely drop-dead gorgeous in the eye-candy department and yet still totally suck as far as enjoyment is concerned. I played Crysis. Gorgeous game, with everything looking so incredibly realistic it was jaw dropping. But after the jaw was picked up off the desk and glitz fades away what was left? A game that was, well, average in my humble opinion. I never finished Crysis because it bored me eventually.

Blizzard's focus on aspects such as playability and plot means that its games far outlast those that focus primarily on visuals. Look at Starcaft as an example. There are professional (yes, people get paid) Starcraft leagues and tournaments in Korea with huge attendances. Not to mention television rights as well. This is from a game that was released in 1998 for goodness sake. World of Warcraft is another example. Graphically WoW is slightly aging, and yet it still manages to enthral a massive audience (well over 11 million subscribers now).

What this also means is good news for Linux gamers. I play WoW on Kubuntu (my Linux distribution of choice) and it runs better in Linux with Wine than it does in Windows XP. Recently I found my Starcraft CDs from back in the day and they played too. Diablo 2 runs as well, and so does Warcraft 3.

This is absolute brilliance by Blizzard. Not only are they ensuring that the largest possible group of hardware configurations on PC can play their games, but they are turning every Linux user into a fan at the same time by their decisions to not be "cutting-edge" in hardware needs.

I just hope that with the soon to be released Starcraft 2 and Diablo 3 they haven't forgotten that, and that we Linux users will be able to contribute to Blizzard's bottom line by spending our money on their great games ... and then play them for the next 10 years. From what I have seen of the videos and screenshots, this does, happily, seem to be the case. Only time will tell.

Monday, May 11, 2009

The Kindle: What's the point?

I certainly am not going to pay nearly $500 for something that will let me read an e-book. In South Africa that's close to R 5000, enough to get yourself a reasonable desktop PC. If I really had to have a device to read e-books on the move I would rather get myself an iPod Touch or even go the whole hog and get an iPhone. The i devices also let you read books but add a music player, wifi access, 3G Internet and a whole lot more.

But even the iPhone: do we really need a device like that? Or many of the other gadgets and paraphernalia that clutter our lives and the shelves of electronics stores? Sure it looks gorgeous, and if you're a travelling salesman who needs access to your documents, emails and the web while on the move, then I can see it being a good tool. But most people go to work in an office, where there is a PC on the desk. Then they go home in the evenings, where there is usually a PC (or laptop) as well.

I am not one of those gadget freaks who must have the latest and greatest just because everyone else does. I will not buy an iPhone (even though I am enamoured by its capabilities and sexiness) because for me the vast majority of its features would just be a toy. I don't own a PS3 or XBox 360 because my PC at home has enough gaming potential to keep me more than happy.

Why do we allow our money-spending decisions to be dictated by fashion? Who cares what the Joneses have or want? Is our society really so driven by the materialism-as-status mindset that people will, like someone I know, put themselves into debt just to keep up with "everyone else"? Questions I don't have the answer to, unfortunately.

A fantastic video I was pointed at a while ago discusses our materialistically driven society and how that affects the world we live in. The Story of Stuff is a fantastic narrative about what our society's impact is on the world we live in and how we cannot keep wanting, wanting, wanting. Take a look and tell me that it's not at least a little eye-opening.

Next time you watch that advert about the latest sexy gadget that really adds no value to your life apart from you being able to say you have one, ask yourself if buying it is really what you need in your life. Ask whether owning it will change your life for the better or whether it will merely be an ornament.

The Amazon Kindle? Last I heard books were available on this fantastic technology called paper for a tenth of that price. Perhaps we should buy more of those and fill book shelves. Or maybe I'm the odd one. If that's the case ... then I guess just ignore this blog post....

Wednesday, April 29, 2009

Don't forget your Bitwise Operators

Edit: After getting some comments about this post I realised some people might want a little intro to what bitwise operators are. A great tutorial on them for PHP can be found here

I have had discussions before with other PHP developers, and in fact with developers in general, geeking out about ways to get things done in our respective languages etc. One thing I noted from these chats is that the knowledge of Bitwise operations, and how they can be used to create cleaner, more efficient applications, seems to be lacking. So I thought I would take the opportunity to point out one way that we are using Bitwise operators to make our jobs a little easier here at Synaq in developing Pinpoint 2.

A little bit of history. Pinpoint 2 is our own development to replace the aging Pinpoint 1 interface, which is based on the widely used, open source Mailwatch PHP application. Essentially it is a front end interface for the Mail Security service we provide: scanning companies' mail on our servers for viruses, spam, etc., before forwarding the clean mail on to the client's own network. One thing that the old system (and of course the new one) needs to do is store classifications of mail. Some of the types mail gets classified as are Low Scoring Spam (i.e. probably spam but a chance that it could be clean), High Scoring Spam (i.e. definitely spam with a very slim chance that it is clean), Virus, Bad Content (e.g. the client blocks all mail with movie attachments), etc. The old Pinpoint 1 based on Mailwatch uses a database schema that stores a 1 or 0 flag in a separate column for each specific type. As a simplified example:

- is_high_scoring: 0 or 1

- is_low_scoring: 0 or 1

- is_virus : 0 or 1

- is_bad_content: 0 or 1

So for Pinpoint 2 we decided to reduce all those classification columns into one and assign each classification a bit value. For example:

- if clean: classification = 0

- if low scoring: classification = classification + 1

- if high scoring: classification = classification + 2

- if virus: classification = classification + 4

- if bad content: classification = classification + 8

- if something else: classification = classification + 16

- if another something else: classification = classification + 32

So a message that is high scoring spam, a virus and has bad content gets 2 + 4 + 8 = 14, and in our classification column a value of 14 is stored. If we now want to check the type in our interface we do not have to access multiple columns and determine whether each contains a 1 or 0. Instead we retrieve one value and work our bitwise operators on it. For example with Propel in symfony, if we wanted all messages that were viruses:

$mail_detail_c = new Criteria();

$mail_detail_c->add(MailDetailsPeer::CLASSIFICATION, 4 , Criteria::BINARY_AND);

$virus_mail_obj_array = MailDetailsPeer::doSelect($mail_detail_c);

We now have an array of results with all messages that are viruses. If we wanted all messages that were viruses AND high scoring spam:

$mail_detail_c = new Criteria();

// Virus bit (4) must be set ...
$classification_criterion = $mail_detail_c->getNewCriterion(MailDetailsPeer::CLASSIFICATION, 4, Criteria::BINARY_AND);

// ... AND the high scoring bit (2) must be set as well
$classification_criterion->addAnd($mail_detail_c->getNewCriterion(MailDetailsPeer::CLASSIFICATION, 2, Criteria::BINARY_AND));

$mail_detail_c->add($classification_criterion);

$virus_and_spam_obj_array = MailDetailsPeer::doSelect($mail_detail_c);

You can see from all this that it is a lot easier to write dynamic queries using bitwise operators than it is to try and add new columns to a schema every time you add a new classification type.
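The same checks can be done in plain PHP once the single classification value has been loaded. This is only a minimal sketch, assuming PHP 7 or later; the constant and function names are made up for illustration and are not part of Pinpoint, Propel or symfony. The bit values are the ones from the list above.

```php
<?php
// Hypothetical constant names; the bit values mirror the list in the post.
const CLASS_CLEAN        = 0;
const CLASS_LOW_SCORING  = 1;
const CLASS_HIGH_SCORING = 2;
const CLASS_VIRUS        = 4;
const CLASS_BAD_CONTENT  = 8;

/** True when every bit in $flags is set in $classification. */
function has_all_flags(int $classification, int $flags): bool
{
    return ($classification & $flags) === $flags;
}

/** True when at least one bit in $flags is set in $classification. */
function has_any_flag(int $classification, int $flags): bool
{
    return ($classification & $flags) !== 0;
}

// Combine flags with bitwise OR rather than addition,
// so setting the same flag twice is harmless.
$classification = CLASS_HIGH_SCORING | CLASS_VIRUS | CLASS_BAD_CONTENT; // 14

var_dump(has_any_flag($classification, CLASS_VIRUS));                       // bool(true)
var_dump(has_all_flags($classification, CLASS_VIRUS | CLASS_HIGH_SCORING)); // bool(true)
var_dump(has_any_flag($classification, CLASS_LOW_SCORING));                 // bool(false)
```

Using OR instead of the "classification + n" shorthand matters in practice: adding 4 twice would silently turn a virus flag into a bad content flag, while OR-ing it twice changes nothing.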

Wednesday, April 22, 2009

Matt Kohut. A man in need of an education

“There were a lot of netbooks loaded with Linux, which saves $50 or $100 or whatever, but from an industry standpoint, there were a lot of returns because people didn’t know what to do with it,” he said.

There is no way to verify whether this is true or not, but let's assume it is. The simple reason why no one knows what to do with it is that the world is so ingrained into using Windows that people have no idea that there is something else. They see something different and they think it is immediately inferior just because it is not familiar. This is, of course, speculation on my part so let's move on for now.

Four months ago my fiancée moved in with me. Her computer was flaky as hell because Windows XP did not like her hardware for whatever reason. The problem is that because no one except Microsoft can see the source code there were no guides on the web to help fix her problem, so it was either spend a ton of cash or try another Operating System.

“Linux, even if you’ve got a great distribution and you can argue which one is better or not, still requires a lot more hands-on than somebody who is using Windows.

“You have to know how to decompile codes and upload data, stuff that the average person, well, they just want a computer.

“So, we’ve seen overwhelmingly people wanting to stay with Windows because it just makes more sense: you just take it out of the box and it’s ready to go.”

She did still want Windows for the familiarity and as a "safety net", so we started off by reformatting her drive into two chunks for a dual boot configuration. We installed Windows. Four hours and three restarts later, Windows was up. But this was a pre-SP1 disk she had with her machine so we had to install our own firewall, antivirus and a trove of other "security software" before we went online to install Windows Updates.

Whew! That done, we decided to install Ubuntu on the other partition. 45 minutes later she was looking at her Gnome desktop. Her 4 year old printer worked out of the box, scanner, the lot.

Then the other day she wanted to get her favourite old game Dungeon Keeper 2 installed so that she could play for a bit. I suggested she just boot into Windows XP cos it was more likely to run. I suggested this simply because I was busy at the time and didn't want to have to go through the hassle of trying to make a game designed for Windows ONLY to run on Linux.

She pouted at me. She actually dropped her lip in a sullen pout and then, and I will never forget, uttered the words "I don't like Windows". I felt so elatedly happy that I got up and got Dungeon Keeper 2 working. And as a side note, there was no problem getting it working. It installed and ran with no fuss whatsoever.

My point? My fiancée uses a PC in her job. She is by no means a computer geek or ultra-savvy. She had to ask how to watch a DVD in Ubuntu. I told her to put the disk in. It loaded and she watched with no problem. Decompile codes (whatever that means anyway) and upload files? I beg your pardon?

Kohut argues that for Linux to be successful on netbooks (or notebooks or desktops for that matter), the open source operating system needs to catch up with where Windows is now.

Ubuntu, as far as an interface goes, exceeded Windows XP and Vista even a year before Vista was released. The combination of Gnome and Compiz or KDE 4.2 blows away anything Microsoft has been able to get Windows to do visually. Stability? Linux has been the predominant server technology keeping hugely complicated web presences and sites running for decades now, so stability is not something to worry about.

“Linux needs to get to the point where if you want to plug something in, Linux loads the driver and it just works.

“If I need to go to a website and download another piece of code or if I need to reconfigure it for internet, it’s just too hard.

“I’ve played around with Linux enough to know that there are some that are better at this than others. But, there are some that are just plain difficult.”

A few years ago I tried running a Fedora desktop but I struggled to get my USB DSL modem working to get online. These days? I plug it in. It asks me for a username and password, and I am online. That's it. Could it get any easier, Mr Kohut?

“From a vendor perspective, Linux is very hard to support because there are so many different versions out there: do we have Eudora, do we have SUSE, do we have Turbo Max?

This is just evidence of Mr Kohut's lack of expertise in the field. Eudora is a mail client (you know, like Outlook?), and Turbo Max has nothing to do with software as far as Google tells me. And no, you don't have to support every distribution of Linux. Pick one or two (Ubuntu and Fedora are two good ones) and support only them. In fact, charge for Ubuntu and Fedora like you do for Windows installations, but instead of it being payment for the software make it payment for support that people can actually get. They get the OS for free, you charge for support, and customers actually do get support on their OS.

I am shocked, angry and a little sad too that someone in that position of influence and power can be so dense, clueless and downright imbecilic. How can you make remarks on a topic that you obviously know nothing about? What also saddens me is that these comments, my own and those of all the other outraged Linux users, will not be read by the majority of users.

Monday, April 20, 2009

Mac Ads seem to be a little presumptuous

And it got me thinking, what reason do people have to switch to Mac? For the stability of the OS? The built-in nature of all the applications? The one downside (and the ONLY reason that I keep a Windows XP installation on my home PC) is that gaming, with some exceptions, does not work on Mac or Ubuntu. But then, when you can buy two PCs with the same hardware specs for the price of one Mac, install Ubuntu on both for nothing and get all the same benefits as a Mac, I still don't understand why people are so drawn to it. I understand that the Mac is prettier to look at but not everyone has R12 000 for the bottom range Macintosh just for eye candy.

The Mac ads seem to point out that PCs suffer from viruses, that they have no applications for producing cool movies, pictures, etc., that they crash constantly and, now, that they need massive hardware upgrades because of the operating system they use. Erm. None of that is true if Ubuntu is used. Ubuntu has access to safe collections of applications. In fact, since my switch to Ubuntu as my primary OS, I have never had to worry about finding an application to do what I want. I needed a book cataloguing system because I have a rather large collection, and keeping track of the books I still want can be a little hard. Alexandria is a freely available application that took me 5 minutes to locate in one of these collections. For those already using Ubuntu, just search for Alexandria in your package manager or run this in a terminal:

sudo apt-get install alexandria

I load up my Windows machine and want to find an application to use, and it's a few Google searches to find an application written by somebody. I then have to hope that this person is on the level and that it's safe to use (i.e. contains no viruses) and that the application will actually work properly on my PC and not slow things down too much.

The funniest thing? I play World of Warcraft (I know, seriously geeky, but that's another discussion). WoW actually plays faster in Ubuntu using Wine (Wine is another application, one that tries to make Windows programs work on Linux) than in Windows XP! I couldn't believe it myself but it's true. A game made for Windows runs faster on a competing operating system that has to use a translation layer like Wine.

So no .. I won't be buying a Mac because I am not afraid of malware or viruses, I do not have problems finding applications I need and my operating system is not unstable. In fact... I think I can go get another PC. You stick to your one Apple Mac then.

Wednesday, April 8, 2009

What Trac really needs

"Trac is a minimalistic approach to web-based management of software projects. Its goal is to simplify effective tracking and handling of software issues, enhancements and overall progress."

Well, I have got Trac up and running. Trac actually relies quite heavily on its command-line client which, to be honest, I have no problem in using. Anyone that develops and/or works on and for a *nix environment is probably more than comfortable using the command-line and probably, like me, finds it far more useful and efficient than any GUI could be. There were a few issues however in setting up Trac that I thought I would share here for anyone reading and interested in setting up their own Trac installation.

1. Command Line requires a learning curve

This may seem counter to what I said above but the one advantage a GUI interface has over a command-line is that it is intuitive. With a GUI you can see buttons and prompts beckoning you to use them. With a command line you need to know the commands or ... well you can do nothing. This means that anyone looking to install and run Trac as of now will have to spend extra time learning the, albeit rather basic, commands.

This is alleviated somewhat with a very useful help system as well as fantastic online documentation for Trac, but the fact still remains that that learning curve might put people off.

2. Root access needed

Trac is not a simplistic web application, even though the documentation calls it minimalistic. It requires the person installing it to have root access to the machine, which is one of the reasons why I am moving to running my own server as opposed to continuing with a shared, managed service as I have done in the past. While it is understandable to some degree because of the SVN integration, again, this requirement will limit the available user-base to those who know how to set up and maintain web servers or have enough dosh to fling around to get their server management company to install it for them.

3. No built-in authentication

That's right. Unfortunately Trac does not include its own authentication system, so managing multiple projects for different clients who should not have access to one another's projects can be a little nightmarish. If you want authentication then Trac expects you to use Apache's own built-in authentication systems (or those of whichever web server you happen to have installed). This means that anyone installing it also needs to know how to set up Apache to authenticate users against encrypted password files stored on the server itself and referenced in the Virtual Host settings.

Again, this limits the potential users of Trac to those that are sys-admins or have the money lying around to get someone to do it for them.

4. Let's give Trac a break

I mentioned a few issues I had but let's cut the developers some slack. Why? Well, Trac is only at release 0.11. Yup! They haven't even reached a full release version yet and pretty much what is available is beta-ish. Once you know that Trac development is still steaming ahead and that the "issues" I described above will probably have solutions to make things easier once the development team do increment that version counter to 1.0, it doesn't seem such a bad deal for a free development management and bug-tracking application. I am pretty sure that in the next few weeks to months we will see Trac become a feature complete system and I cannot wait for that day. So far I am very impressed with what I can do with it and am so glad I stumbled across it a few months ago.

Monday, April 6, 2009

National Skirt Extension Project is a WHAT?!

I honestly cannot think there is anything real about this. If our government really has initiated a project as frivolous as this, then we really do need to be careful who we vote into power in the next election. I can only see this as some form of prank. One person commented on another blog that he actually called the agency responsible, who apparently said it's for real and that their client is the NSEP, but that they couldn't go into more details.

I hope this is only some delayed April Fool's joke or an attempt by someone to garner attention and then use that attention to launch some other project or initiative. If anyone has any more information please feel free to share because this is a little scary if it's real....

EDIT: Apparently there is speculation that this advertising is being arranged by Unilever, you know, the household products company. If this really is a viral marketing strategy by a company then I must say they have done good work here. I am actually surprised there is nothing from the government yet to denounce the adverts as nothing to do with them.

Memory caching can be a saviour

Because of the sheer quantity of data we have had to use numerous techniques to try and make the frontend still act at least reasonably responsive when it needs to query the database. Then one day I asked myself "Does the interface really need to query that database so often for data that in essence hardly ever changes?". The scenario is that the interface does not really make many alterations to the data extracted and a lot of the data used is repeated on every page for a specific user's session. One security feature we have, for example, is that every user is defined as belonging to a specific Organisation (or Organisational Unit to be technically correct) and every page load requires retrieving the list of Organisations that the current user is allowed to see. This is not likely to change that often and so we came up with an idea.

We use APC, a memory caching facility for PHP scripts, which also allows you to store your own values in memory explicitly from your code. Thankfully, symfony provides a class that can manage that for us as well, the sfAPCCache class, which makes using the cache a doddle. Our problem? We need to ensure that the name under which we store each piece of data is totally unique.

The solution was to store the results of a database query for our OrganisationalUnits model class into the APC Cache memory. The way we did this was to use the Criteria object for the Propel query as the name of the item to be stored. It stands to reason that if the Criteria object for a specific query is unique then the result will be unique. If the same Criteria object is passed again then the results from the database will be the same as the same Criteria object we passed before. Why query the database a second time?

The APC cache, though, cannot take an object as a name, only a string. That is easily enough done with PHP's serialize() function. But that string is excessively long (a few thousand characters sometimes) so we need to find a way to shorten it and yet keep the uniqueness. So we get the MD5 hash of that serialized Criteria object. There we go. But due to our own paranoia and the need to be 110% sure that we won't by some ridiculous stroke of bad luck create another Criteria object later that against all the statistics of MD5 creates the same hash, we also make an SHA1 hash and concatenate the two hashes. There! Now the chances of any two Criteria objects having the same name are so remote as to be nigh-on impossible.

But it doesn't end there. This doesn't help us if we don't know a way to actually add this to the cache, remove it, etc. For this we go to our OrganisationalUnitsPeer class and override the doSelect method, which receives all calls to run a query on the database, as such:

public static function doSelect(Criteria $criteria, $con = null)
{
    $data_cache = new sfAPCCache();
    // Build a short, unique cache name from the serialised Criteria object
    $serialised = serialize($criteria);
    $md5_hash = md5($serialised);
    $sha1_hash = sha1($serialised);
    $complete_name = "organisational_units_doSelect_".$md5_hash.$sha1_hash;
    if ($data_cache->has($complete_name))
    {
        return unserialize($data_cache->get($complete_name));
    }
    else
    {
        // Cache miss: query the database and keep the result for an hour
        $query_result = parent::doSelect($criteria, $con);
        $data_cache->set($complete_name, serialize($query_result), 3600);
        return $query_result;
    }
}

Rather simple I thought. We also wanted to be sure that if the user added, updated or removed an Organisation the cache would not give an incorrect listing, so we added to the OrganisationalUnits class (not the Peer):

public function save($con = null)
{
    $return = parent::save($con);
    // Drop every cached OrganisationalUnits query once the write has gone through
    $data_cache = new sfAPCCache();
    $data_cache->removePattern("organisational_units**");
    return $return;
}

public function delete($con = null)
{
    $return = parent::delete($con);
    // Drop every cached OrganisationalUnits query once the delete has gone through
    $data_cache = new sfAPCCache();
    $data_cache->removePattern("organisational_units**");
    return $return;
}

Just doing this to the one set of data has increased our page load speeds dramatically, as well as reducing the load on the server itself when we do intense performance testing. We hope to employ this further with other items that similarly load for each page and rarely, if ever, change.
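For anyone who wants to play with the idea without APC, symfony or Propel installed, here is a minimal sketch of the same pattern, assuming PHP 7.1 or later. The ArrayCache class, cache_key() and cached_select() are hypothetical stand-ins written for this example (an in-memory array instead of sfAPCCache, a closure instead of the database), not anything from symfony.

```php
<?php
// Stand-in for sfAPCCache: a tiny in-memory cache (hypothetical, illustration only).
final class ArrayCache
{
    private $items = [];

    public function has(string $key): bool
    {
        return array_key_exists($key, $this->items);
    }

    public function get(string $key)
    {
        return $this->items[$key] ?? null;
    }

    public function set(string $key, $value): void
    {
        $this->items[$key] = $value;
    }

    /** Remove every entry whose key starts with $prefix. */
    public function removePrefix(string $prefix): void
    {
        foreach (array_keys($this->items) as $key) {
            if (strncmp($key, $prefix, strlen($prefix)) === 0) {
                unset($this->items[$key]);
            }
        }
    }
}

/** Build a short cache key from any serializable query description. */
function cache_key(string $prefix, $query): string
{
    $serialised = serialize($query);
    return $prefix . md5($serialised) . sha1($serialised);
}

/** Return the cached result for $query, running $loader only on a miss. */
function cached_select(ArrayCache $cache, $query, callable $loader)
{
    $key = cache_key('organisational_units_doSelect_', $query);
    if ($cache->has($key)) {
        return $cache->get($key);
    }
    $result = $loader($query);
    $cache->set($key, $result);
    return $result;
}

$cache  = new ArrayCache();
$calls  = 0;
$loader = function ($query) use (&$calls) {
    $calls++; // counts simulated database hits
    return ['Org A', 'Org B'];
};

$query = ['user_id' => 42];
cached_select($cache, $query, $loader);
cached_select($cache, $query, $loader); // served from the cache
echo $calls, "\n"; // 1

$cache->removePrefix('organisational_units'); // what save() and delete() trigger
cached_select($cache, $query, $loader);
echo $calls, "\n"; // 2
```

The loader only runs on a miss, and wiping everything under the shared prefix is the blunt but safe way to make sure no stale listing survives a write.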

Friday, April 3, 2009

Our background in symfony

One very important design principle I came across years ago was something called MVC: Model View Controller. Essentially what that entails is that instead of bunging all your PHP code (and MVC does not only refer to PHP, it's a design concept used in lots of other languages) into one file to represent a page, with the database connection, running a query on the database, formatting that data and manipulating it, followed by echo'ed HTML to display that data in tables or whatever format is desired, MVC seeks to separate all the different parts of a web application to make managing them easier.

Model refers to the actual object classes that describe the database schema your data is stored in. Instead of writing your own SQL queries by hand and hard-coding things like database, table and column names, the model is the intermediary. The model is responsible for connecting to the database, generating a query based on parameters you have passed to it, manipulating that returned data and then sending the end result back to whatever called it ready for use.

View refers to the actual presentation on screen that an end user would see. The view doesn't care what the database looks like or even if one exists at all, as long as it has the data it needs to create the presentation it is supposed to. It will generate the HTML needed for the data the model extracted to be displayed in a way that makes sense to the user.

Controller is the intermediary. It will take the events generated from the View, such as mouse clicks, page loads, etc, analyse what the view has done, decide what the next step will be, such as load another view or ask the model to return more data and then send that data to another view, etc. The controller can be thought of as the glue that binds the model and views together.
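To make the three roles a little more concrete, here is a deliberately tiny sketch in plain PHP, assuming PHP 7 or later. It is not how symfony structures things; the class and function names are invented for this example and an array stands in for the database.

```php
<?php
// Model: owns data access. An array stands in for the database here.
final class SalesHistoryModel
{
    private $rows = [
        ['item' => 'Widget', 'amount' => 3],
        ['item' => 'Gadget', 'amount' => 5],
    ];

    public function findAll(): array
    {
        return $this->rows;
    }
}

// View: turns data into HTML and knows nothing about where it came from.
function render_sales_view(array $rows): string
{
    $html = "<ul>\n";
    foreach ($rows as $row) {
        $html .= sprintf("  <li>%s: %d</li>\n", htmlspecialchars($row['item']), $row['amount']);
    }
    return $html . "</ul>\n";
}

// Controller: reacts to a request, asks the model for data, picks a view.
function sales_controller(string $action, SalesHistoryModel $model): string
{
    if ($action === 'list') {
        return render_sales_view($model->findAll());
    }
    return "<p>Unknown action</p>\n";
}

echo sales_controller('list', new SalesHistoryModel());
```

Swapping the array for a real database only touches the model, and changing the markup only touches the view, which is the whole point of the separation.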

Whew! Ok, enough of that lecture. There is one problem with this seemingly clever separation of tasks. Coding an MVC framework can be a nightmarish task and the complexity of making an MVC alone work can be more effort than it's worth. This is where symfony comes in. Symfony is a pre-built MVC framework for PHP, and while setting up your own MVC structure would be laborious, symfony's is ready to go. Using the framework as opposed to writing your own PHP code from scratch actually makes the job even faster than using no MVC at all.

So why is symfony so great? Well, feel free to try it yourself. Symfony's philosophy is convention over configuration, which means that instead of explicitly defining the relationships between, for example, classes and the database schema, there is an implied relationship. For example, if you had a table called "sales_history", the model class that deals with interacting with that table is called "SalesHistory". It's a convention; we agree to use it this way. It is only if you decide not to use this convention and name your class "SalesMade" that you need to worry about reconfiguring aspects of your code to do that.
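The naming rule itself is simple enough to sketch in a couple of lines. The helper below is hypothetical, written only to illustrate the convention (assuming PHP 7 or later); it is not the function symfony or Propel actually uses internally.

```php
<?php
/** Convert a snake_case table name to the CamelCase class name the convention implies. */
function table_to_class_name(string $table): string
{
    // "sales_history" -> "sales history" -> "Sales History" -> "SalesHistory"
    return str_replace(' ', '', ucwords(str_replace('_', ' ', $table)));
}

echo table_to_class_name('sales_history'), "\n"; // SalesHistory
```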

Because of this convention scenario you can do the following steps, after having installed symfony, to have a fully working set of database-agnostic model classes ready to use in your application:

- Go to a terminal and enter:

mkdir project_name;

cd project_name;

/path/to/symfony generate:project project_name

- Then go to "/path/to/project_name/config/schema.yml" and define your database structure in the easy to use YAML syntax

- Go to terminal and enter "symfony propel:build-model;"

At Synaq, we have been using symfony for over a year now on a specific, large, and complex project and it has proved invaluable. There has been a lot of learning and experimentation involved in getting to know and use the framework to its best, but the experience has been well worth it seeing how quickly, even with the learning curve, we have been able to produce results.

There is far too much involved with symfony for me to be able to go into great detail here, and I will be giving more information in future on tricks and tips we have learnt while using it. Suffice it to say, if you want to simplify the way you develop large projects, feel free to go give symfony a look.

Thursday, April 2, 2009

A non sysadmin trying sysadmin

In the past as a PHP developer I have usually used servers to host the work I have done that have already been pre-setup, using a shared/managed hosted service where all the necessary server software (Apache, PHP, MySQL etc) is already up and running. This has served me well in the past because I could then focus on making the applications and leave the server administration to those more qualified.

However, over the last few days, I purchased myself a Linode, a VPS, which allows me to install a Linux distro of my choice as well as then go ahead and install my own applications onto it with the eventual aim of being the web and mail server for my domain (which is currently hosted at an afore-mentioned shared hosting company). Doing this has helped me learn an absolute ton about what goes into setting up a Linux, production web server as well as helping hone my Google search skills even further.

The reason I decided to do this was simple. I needed a server that would allow me root access to install applications such as Trac, a web based application for SVN repository browsing and issue tracking. Also, I wanted more control over things such as the PHP modules I can install myself, and even which version of PHP I run.

For this little project I chose to use the Ubuntu 8.10 (Intrepid Ibex) server distribution. One reason was simply I am used to using Ubuntu as it is my desktop environment as well, and also because one of my colleagues at Synaq (a systems administrator by profession) suggested it, citing that for my limited needs I needn't worry about LTS versions and so on. Included in my little server are the usual applications:

- Apache 2 for webserver

- PHP 5.2. I needed 5.2 because I want to use the new Propel 1.3 ORM in my PHP applications and 5.2 is a minimum requirement.

- MySQL 5.0 as my primary database application predominantly because I am used to it. I plan to also install and experiment with PostgreSQL, amongst others.

- Python, Perl and Ruby. All because I plan to play with them too :)

- SVN as my code store and code backup

- Trac (which runs on Python) to use as my project management and SVN interface tool for a few projects I'd love to make.

- http://www.howtoforge.com/perfect-server-ubuntu8.04-lts-p6

- https://help.ubuntu.com/8.10/serverguide/C/index.html

- https://help.ubuntu.com/community/SubversionInstall

- https://help.ubuntu.com/community/Trac

- http://trac.edgewall.org/wiki/TracGuide

- A whole heap of others I used to help fix small problems here and there that I came up against that the more "official" docs above had not covered.

The best part of this whole experience is going to my new server IP address (I haven't transferred the domain yet, DNS is the next issue I need to tackle) and seeing it all happily load in my browser....

Wednesday, April 1, 2009

It's all about me.

But enough about the boring history of PHP (I assume boring, as when I discuss it with most people the glazed look is a dead give-away), and more about me. I am Gareth ... Oh, you want a bit more? Alrighty then. I am, as of the date of this post, a 28 year-old, engaged (should earn me some kudos with the little lady), South African guy, currently employed by Synaq, a company that specialises in providing Managed Linux Services to corporations using Open Source technologies. Pretty much we're a bunch of Open Source geeks having a blast playing with really powerful machines that handle millions of processes per day. Well, that's what the System Admins do at least. I am the geek that makes some of the software my colleagues, and even some of our clients, use. I am the Web Developer. Or rather a web developer, because with my compatriot Scott we are the team of two that try our best to write, hack, squeeze, prod, improve, maintain and otherwise maul code into some semblance of what the company wants. It's a nice job ... keeps me kinda busy ... and we get free coffee ... which is a nice perk.

And I am waffling so ONWARD! The reason I created this blog is simply because in my day-to-day work I often find myself sitting with a Great Discovery in my hands, either conjured alone or with Scott, and no one to share it with. While there are sites I could post this stuff onto it somehow feels more like just chucking stuff at the world in general than it does making a contribution. So, I hope to use this blog to share my web development woes and I have a few ideas I hope to get up and running on here as well. One of which is a complete A-Z tutorial of becoming a PHP web developer. All the way from understanding the client-server chain, to installing a testing server on your machine, choosing an IDE and getting stuck into the coding.

Well, enough waffle for today. Tomorrow I shall make my first post (or later if I can't contain myself). Thanks for taking the time to read my first piece of drivel and I hope you come back.